By Gavin Boyle

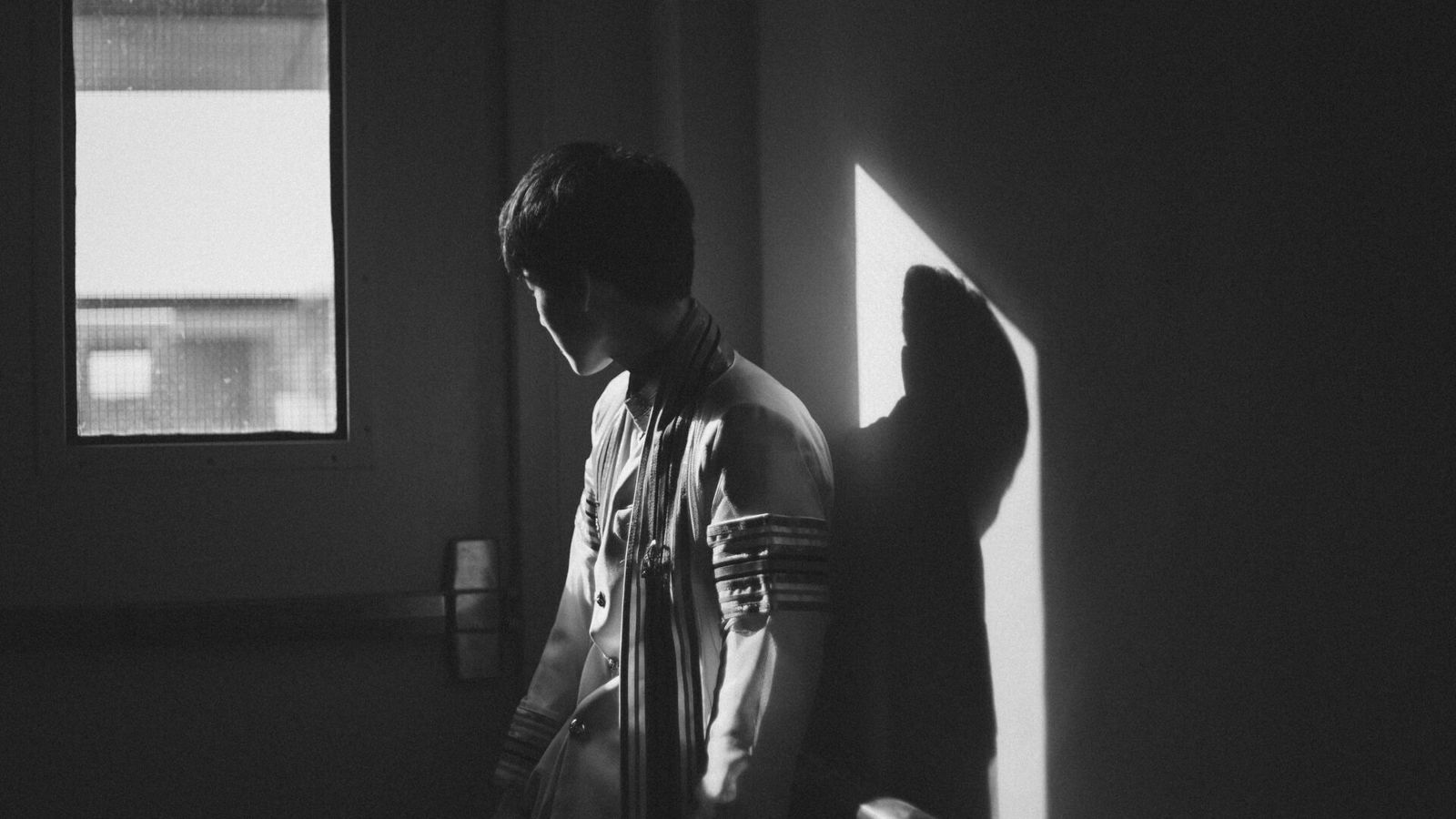

While many teens turn to AI chatbots to seek help for their mental health problems, a new study found that the technology cannot reliably offer sound advice for their struggles.

“When these normal developmental vulnerabilities encounter AI systems designed to be engaging, validating and available 24/7, the combination is particularly dangerous,” said Dr. Nina Vasan, director of Stanford Medicine’s Brainstorm Lab.

Dr. Vasan’s research found that none of the mainstream chatbots (ChatGPT, Gemini, Claude and Meta AI) responded appropriately to the mental health conversations that researchers, posing as teens, fed them. In fact, the chatbots often offered suggestions that could make the problem worse, such as diet tips for users describing eating disorders or advice on hiding scars from cutting. Furthermore, as conversations grew longer, the chatbots became worse at identifying mental health emergencies and less willing to push back on users’ thoughts and feelings instead of simply affirming them.

“They often fail to recognize when they need to step out of these roles and firmly tell teens to get help from trusted adults,” the study said.

These findings are disturbing because millions of teens turn to AI for mental health advice, since it is free to use and available 24/7. The consequences, however, can be deadly, as some families have tragically discovered. In 2024, a young boy took his own life after talking with an AI chatbot that was posing as a therapist but was unable to help him.

“Generative AI systems are not licensed health professionals, and they shouldn’t be allowed to present themselves as such. It’s a no-brainer to me,” said California State Assemblymember Mia Bonta, who introduced a bill that would make it illegal for chatbots to pose as certified mental health specialists.

Some AI platforms are now introducing features that could, in theory, make them safer for younger users, including stronger safeguards against discussing mental health with users known to be under a certain age. However, these safeguards have yet to be proven effective.

At the same time, the American Psychological Association has argued that chatbots should not be allowed to pose as certified therapists to any user, regardless of age, because the technology has no special training in helping people facing a mental health crisis. At the very least, parents should be extremely wary of handing their kids this technology for mental health support, and of letting them continue if they discover their child is already using chatbots for that purpose.